Two weeks ago I reported on the first part of the SBOM Challenge at the S4x23 cybersecurity conference in Miami, Florida. The Day 1 goal was for each team to create an accurate SBOM for three target artifacts and then identify known vulnerabilities in the components in each SBOM. Today’s blog reports on Day 2 of the S4x23 SBOM Challenge, where Idaho National Laboratory (INL) provided INL-generated SBOMs for each artifact for the participants to review and use as they saw fit.

SBOM In(di)gestion

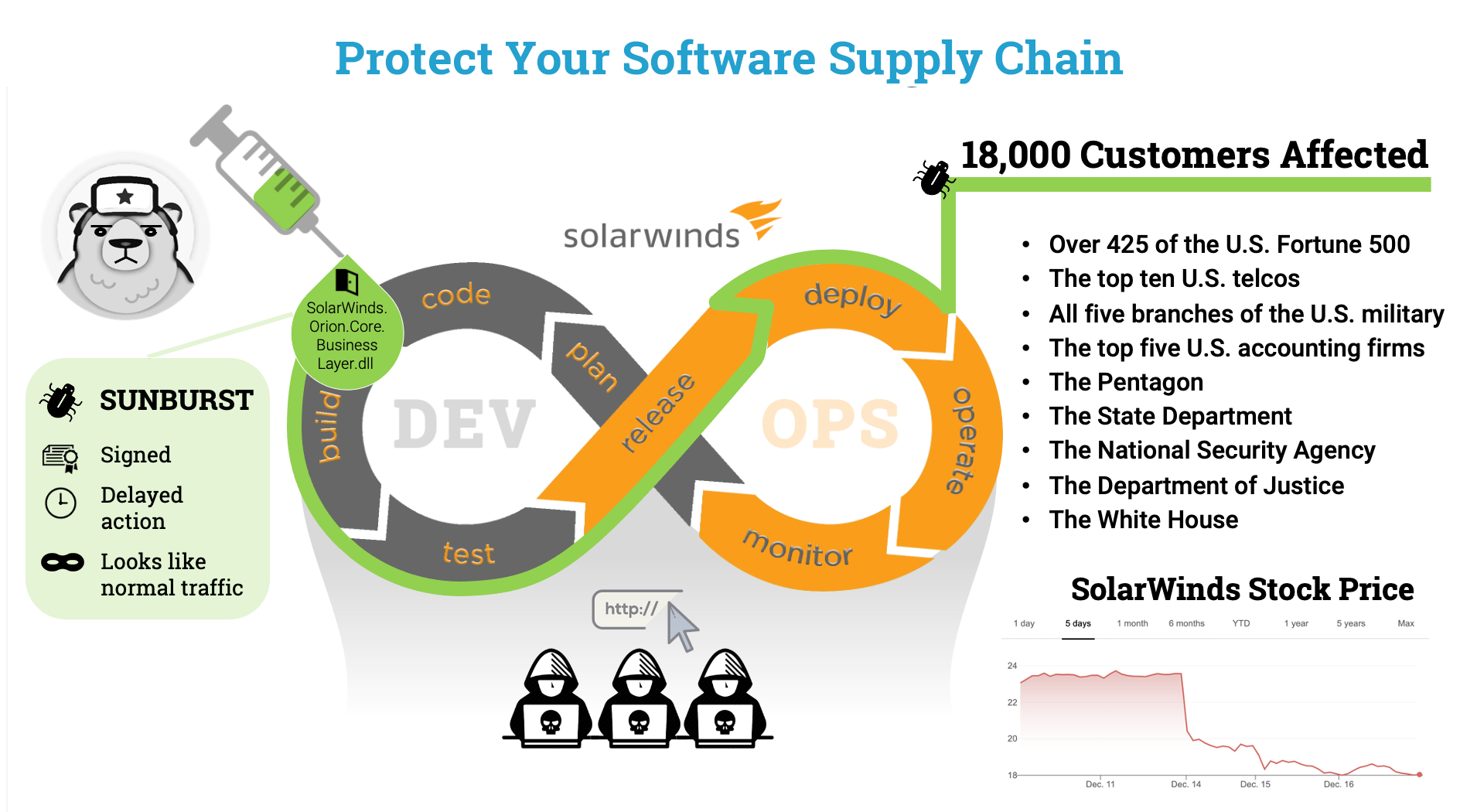

Having to ingest a 3rd-party SBOM was an interesting challenge for us, as our focus for the last half decade has been SBOM creation and enrichment. This focus was born out of necessity: there just aren’t many SBOMs available to ingest (yet), but current geopolitical tensions demand visibility into the software supply chain now — not in a few years. We currently have SBOMs for over 45 million IT and OT products (and the list grows daily), so we’ve tended to use those rather than beg for other people’s SBOMs.

That said, we know that some vendors will soon be providing SBOMs to their customers, so we have been working on SBOM ingestion for the past year. We certainly had some ideas on what needs to happen to make ingested SBOMs actually useful to asset owners — it is not just a matter of reading a JSON file and putting it in a database. At least, it isn’t if you want to actually make effective use of the SBOM to defend your control system.

When we received the INL SBOMs, the first thing we did was compare them to our SBOMs. A few things became immediately apparent. To begin with, INL generated each of their SBOMs using a different tool which resulted in wildly different SBOMs. Let’s take a look…

SBOM from Source Code via Black Duck

The INL SBOM identified Black Duck as the tool used to generate their SBOM for the Windows-based artifact. Having collaborated with Michael White from Black Duck in previous SPDX Docfests and NTIA PlugFests, I know that Black Duck uses source code at build time to generate their SBOMs. This is different from how FACT generates an SBOM: FACT performs binary analysis to decompose and identify the components existing within a binary.


SBOMs made from binary analysis can be significantly different from those generated from source code or at build time. For example, the Black Duck SBOM includes only 18 files, whereas the FACT SBOM reports over 3376 files.

Now, if you’re looking at that discrepancy and thinking, “Wow, how did Black Duck miss so many files?” — I want to stop you there. SBOMs made from different methods have different strengths. One of the specific strengths of binary analysis SBOMs is that they are able to list all the binary blobs within an artifact, not just the open source packages. FACT’s library of OS-agnostic unpackers is very robust and can find all sorts of interesting things hidden in a file. On the other hand, source code SBOM generation generally won’t report on all those interesting files because they don’t get created until after compilation. While it certainly makes for a simpler SBOM, the loss of data means you may miss interesting/obscured subcomponents.

There was much more consistency in package identification between our SBOM and INL’s SBOM, with their SBOM having 10 packages and FACT’s SBOM having 30 unique packages. The packages we agreed on included LeadTools, Microsoft binaries, and Rogue Wave products.

However, we noticed one important difference: the INL SBOM reported Newtonsoft.Json.dll as being in the artifact, but we could find no evidence of it in either files or packages. This DLL turned out to be another false positive; we later learned that its presence in the INL SBOM was due to internal test code in the build pipeline. Newtonsoft.Json.dll was never included in the product actually deployed by the end user, so it shouldn’t be listed in the supplied SBOM. This is a great example of how SBOMs created from source and SBOMs created from binaries both provide value. One isn’t superior to the other.
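One way to surface discrepancies like this is to diff the two SBOMs by name and flag anything that appears only in the supplied SBOM for manual review. The sketch below is a minimal illustration and not how FACT does it: it assumes both SBOMs are SPDX 2.x JSON documents with top-level `packages` and `files` arrays, it matches on lower-cased names only, and the sample data is made up. The function names are hypothetical.

```python
def names(spdx_doc: dict) -> tuple[set[str], set[str]]:
    """Return (package names, file names) from an SPDX 2.x JSON document."""
    packages = {p["name"].lower() for p in spdx_doc.get("packages", [])}
    # SPDX file names are commonly written relative to the root ("./x.dll").
    files = {
        f["fileName"].removeprefix("./").lower()
        for f in spdx_doc.get("files", [])
    }
    return packages, files


def diff_sboms(supplied: dict, observed: dict) -> dict:
    """Compare a supplied SBOM against one generated from the binary in hand."""
    supplied_pkgs, supplied_files = names(supplied)
    observed_pkgs, observed_files = names(observed)
    return {
        "agreed_packages": sorted(supplied_pkgs & observed_pkgs),
        # Claimed by the supplier, but no evidence in the binary: review these.
        "unconfirmed_packages": sorted(supplied_pkgs - observed_pkgs),
        "unconfirmed_files": sorted(supplied_files - observed_files),
        # Found in the binary, but absent from the supplied SBOM.
        "unlisted_packages": sorted(observed_pkgs - supplied_pkgs),
    }


# Illustrative sample data only; these are not the actual challenge SBOMs.
supplied = {
    "packages": [{"name": "LEADTOOLS"}, {"name": "Newtonsoft.Json"}],
    "files": [{"fileName": "./Newtonsoft.Json.dll"}],
}
observed = {
    "packages": [{"name": "leadtools"}, {"name": "Rogue Wave"}],
    "files": [{"fileName": "./app.exe"}],
}

report = diff_sboms(supplied, observed)
print(report["unconfirmed_packages"])
```

Names rarely match this cleanly across tools, so a real reconciliation would lean on stronger identifiers (hashes, CPEs, or package URLs) wherever the SBOMs provide them.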

Another challenge in comparing SBOMs is the definition of a package. If you read the SPDX specification, you’ll see that a “package” is a deliberately abstract concept:

A package refers to any unit of content that can be associated with a distribution of software.

The SPDX specification goes on to give some examples:

Any of the following non-limiting examples may be (but are not required to be) represented in SPDX as a package:

- a tarball, zip file or other archive

- a directory or sub-directory

- a separately distributed piece of software which another Package or File uses or depends upon (e.g., a Python package, a Go module, ...)

- a container image, and/or each image layer within a container image

- a collection of one or more sub-packages

- a Git repository snapshot from a particular point in time

So basically a package can be whatever the SBOM creator wants it to be. For example, some SBOM producers use the word “package” to mean only open source software, leaving any proprietary software out of the SBOM. Since proprietary software can certainly be from a 3rd-party source, we don’t think this is a good idea.

To further understand why the definition of a package can significantly impact the package count in an SBOM, let’s take a look inside this artifact. The figure below shows a portion of the SBOM in a graphical format. The column on the left shows the file name and the column on the right shows the package that each is part of (as we see it). You’ll notice that we have associated the 20 files with a single LEADTOOLS package. However, one could also argue that each of these DLLs is a separate package as the LEADTOOLS developers could have easily included other packages inside a DLL. You’ll also notice that we include OSIsoft’s proprietary packages and not just open source packages in our SBOM.
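To make the counting effect concrete, here is a small sketch that counts packages for the same file list under two grouping policies. The file names and the `product` field are hypothetical placeholders, not entries from the actual artifact.

```python
from collections import defaultdict

# Hypothetical inventory: 20 DLLs from one vendor product plus one other file.
files = [
    {"name": f"lt_module_{i:02d}.dll", "product": "LEADTOOLS"}
    for i in range(20)
]
files.append({"name": "vendor_app.exe", "product": "Vendor Application"})


def group_by_product(inventory):
    """Policy A: every file from the same product belongs to one package."""
    packages = defaultdict(list)
    for entry in inventory:
        packages[entry["product"]].append(entry["name"])
    return dict(packages)


def one_package_per_file(inventory):
    """Policy B: every file is treated as its own package."""
    return {entry["name"]: [entry["name"]] for entry in inventory}


by_product = group_by_product(files)
per_file = one_package_per_file(files)
print(len(by_product), len(per_file))  # 2 21
```

Both results describe the same 21 files; only the definition of a package changed, which is why raw package counts from two SBOMs are not directly comparable.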

As I mentioned in my previous blog post, our focus is producing very high-quality results, so the FACT platform conservatively marked a number of files as subcomponents rather than packages. FACT takes care to perform package recognition not only on filenames but also on a host of data sources and methods, since incorrectly identifying packages can result in false-positive vulnerability assignments. And believe me, false positives are the curse of the vulnerability detection world.
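The general idea of not trusting a filename alone can be sketched as a simple agreement rule: only assign a package when several independent signals point to the same name. This is purely an illustrative assumption and not FACT's actual algorithm; the evidence keys (`filename`, `version_resource`, `signer`), the threshold, and the function name are all hypothetical.

```python
from collections import Counter
from typing import Optional


def assign_package(evidence: dict, min_agreeing: int = 2) -> Optional[str]:
    """Return a package name only when enough independent signals agree.

    `evidence` maps a signal name to the package name it suggests,
    or to None when that signal found nothing.
    """
    votes = Counter(value.lower() for value in evidence.values() if value)
    if not votes:
        return None
    name, count = votes.most_common(1)[0]
    # A single matching signal (e.g. just the filename) is not enough.
    return name if count >= min_agreeing else None


weak = {"filename": "examplelib", "version_resource": None, "signer": None}
strong = {"filename": "leadtools", "version_resource": "LEADTOOLS", "signer": "LeadTools"}

print(assign_package(weak))    # None
print(assign_package(strong))  # leadtools
```

A file that fails the rule would stay in the SBOM as an unattributed subcomponent rather than being matched to a package and inheriting that package's vulnerabilities.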

Such discrepancies highlight an important issue that SBOM users need to be aware of: the potential for conflicting SBOMs and how to reconcile them. It is unlikely there will be a perfect “canonical SBOM” for a decade, yet every SBOM provides valuable information. Think of differently produced SBOMs like differently produced medical images. Ultrasounds, X-rays, CT scans, and MRIs all produce views of the inside of the body, but each shows different things. It would be a poor doctor who looked at only MRIs and ignored the other data sources. Rather, like a skilled doctor who uses all the data available, we think it is wise to understand the different SBOM production methods and leverage all the information rather than dismissing one SBOM type in favor of another.

Nothing to See Here Folks

We didn’t perform an SBOM comparison on the “bare-metal” binary from the TPU 2000R Transformer Protection Unit as this golden oldie was an oddity. Our focus is on SBOM creation and vulnerability management at scale rather than on edge cases rarely seen by most companies’ OT groups. And frankly, we disagree that the items listed in the INL SBOM belong in an SBOM at all: they were header files pointing to the C Standard Library. The US government’s objective for creating the SBOM initiative has been to get visibility into the 3rd-party supply chain, not to reverse-engineer proprietary software.


Another SBOM Probably from Source Code

INL didn’t specify how the SBOM for the embedded Linux artifact was created, but because it listed only one file, it was likely also generated from source code. INL’s SBOM listed many more packages than FACT’s did; however, this goes back to the key difference between source-code-based and binary-based SBOMs I mentioned earlier: identifying “unique” packages through binary analysis is more ambiguous at scale. The flip side is that binary analysis SBOMs are more robust, since they can be attempted on any binary, even when no source code is available. They also provide much stronger attestation, since there is never any doubt that the SBOM in hand applies to the binary in hand.
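When an SBOM arrives separately from the binary, one basic check that it applies to the binary in hand is to compare hashes. The sketch below assumes an SPDX 2.x JSON document whose `packages` or `files` entries carry a `checksums` list with `algorithm` and `checksumValue` fields; the function names and sample data are hypothetical, and a source-generated SBOM may not record a hash of the shipped binary at all.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the lower-case hex SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def recorded_sha256s(spdx_doc: dict) -> set:
    """Collect every SHA-256 value recorded for packages and files."""
    digests = set()
    for entry in spdx_doc.get("packages", []) + spdx_doc.get("files", []):
        for checksum in entry.get("checksums", []):
            if checksum.get("algorithm", "").upper() == "SHA256":
                digests.add(checksum["checksumValue"].lower())
    return digests


def sbom_matches_binary(spdx_doc: dict, binary: bytes) -> bool:
    """True if the SBOM records the SHA-256 of exactly this binary."""
    return sha256_hex(binary) in recorded_sha256s(spdx_doc)


# Illustrative data: stand-in bytes instead of a real firmware image.
firmware = b"pretend this is the firmware image"
doc = {
    "packages": [
        {
            "name": "firmware",
            "checksums": [
                {"algorithm": "SHA256", "checksumValue": sha256_hex(firmware)}
            ],
        }
    ]
}

print(sbom_matches_binary(doc, firmware))
print(sbom_matches_binary(doc, b"a different build"))
```

A match only shows that the SBOM was written about this exact binary; it says nothing about whether the component list inside the SBOM is complete or correct.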

Summary

This step of the SBOM Challenge proved to be an opportunity to both learn and educate. As I said, ingestion has not been our focus, so the comparisons we performed have informed our development efforts. It also provided us the opportunity to articulate the importance of avoiding false positives when determining how to assign packages. The challenge made very clear that different SBOM creation methods will produce different SBOMs, and all are useful.

One interesting aspect of this day was the team’s chance to work with one of the other challenge participants, Cybeats, who do focus on SBOM ingestion. Cybeats themselves don’t create SBOMs but rather provide SBOM management tools. They ingested both the SBOMs produced by INL and the ones we provided. I think it was valuable to all the attendees to see how the various supply chain solutions can integrate well.

Giving our users the biggest, most accurate view of their supply chain risks by including data from as many sources as possible (including information produced by our so-called competitors) has been Eric’s vision from the day he founded aDolus. Making our software supply chain secure will only be possible if we work together.

Stay tuned for Part 3 where I’ll go into more detail on VEX document ingestion.

And if you would like to see how FACT generates SBOMs, as always, we'd be happy to show you!

Derek Kruszewski

Derek Kruszewski is a data scientist and mechanical engineer with a passion for automating control systems using AI, particularly at oil and gas facilities. Derek leads the vulnerability management innovations at aDolus and (with his extensive yoga background) can fix your back if it hurts.
